“Data-Driven Thinking” is written by members of the media community and contains fresh ideas on the digital revolution in media.

Today’s column is written by Augustin Amann, chief product officer at S4M.

As part of our industry’s soul-searching about the future of audience targeting in a post-cookie/IDFA world, the notion of data frugality should be at the forefront of everyone’s mind. The central tenet of data frugality is that companies don’t deserve unlimited access to user data. They should use the least amount of data needed to fulfill their marketing objectives – no less, but no more.

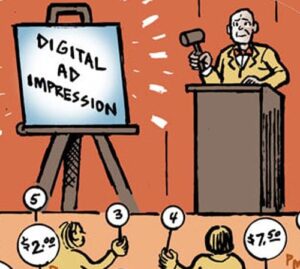

This principle has been easier said than done in the programmatic era. Third-party cookies allowed our industry to claim that 1:1 personalized marketing was good for everyone. In its tantalizing way, the cookie actually undermined our ability to separate quality from quantity. Many third-party cookie-derived audience segments were neither accurate nor particularly insightful. We all drank the Kool-Aid and went all in on practices such as retargeting, which ultimately provoked the consumer backlash that put us in our current predicament.

This transitional period requires all of us to take a breath and earnestly reimagine the role of data in marketing. Data frugality should be a critical part of that process. It is important to recognize that data frugality depends on a deeper commitment to data utility. As an industry, we need to make a concerted effort to shift our focus toward the data that will actually help us meet our brand objectives, and away from extraneous data that won’t meaningfully improve campaign results.

Data quality is a concept that differs from project to project and is often inherently subjective. That is no excuse, however, for failing to be more disciplined and thorough about data processing design in the post-cookie world. That design should include assessing the following characteristics:

- Is the data recent or rare?

- Is the data reliable?

- Does the data offer valid representation?

- Are the operating costs associated with the data worth the benefits?

I would say that most companies have asked few, if any, of those key questions in the past. Instead, they have been more fixated on algorithm quality than on data quality. That needs to change. We can no longer casually rely on the turbocharged, logged-in data of the walled gardens, whose own interests drive every enhancement and change to their data gathering.

By taking a more proactive, methodical approach to data harvesting, we will more readily extract the full value of the data we collect and avoid storing extraneous data. This will make it easier for all of us to stay GDPR/CCPA/CPRA/CDPA-compliant. Better data hygiene will also show that widening the aperture of identity from the individual to the cohort level need not mean a loss in consumer relevance or engagement.

And for anyone concerned about a possible loss of scale, less can actually be more. A more balanced mix of first-party data, combined with more advanced contextual and location targeting, can make up for the loss of third-party cookie-based data. And there will certainly be fewer brands blowing past the frequency caps, a hallmark of the wild and woolly days of retargeting.

Change is hard. Uncertainty is scary. But we have no choice. The past year of social and political unrest combined with the unrelenting fury of a global pandemic has led to a societal reckoning. Companies now have to embrace corporate social responsibility in a meaningful way. At its core, data frugality is about corporate responsibility and brands’ respect for consumer privacy. By truly taking that ideal to heart, brands can still succeed in a way that is additive to the world and also sustainable for their businesses.

Follow S4M (@s4mobile) and AdExchanger (@adexchanger) on Twitter.